PyTorch

Published:

This lesson follows the official PyTorch basics tutorial: https://pytorch.org/tutorials/beginner/basics/intro.html

Fashion-MNIST

- https://github.com/zalandoresearch/fashion-mnist

topic = "pytorch"

lesson = 1

from n import *

home, models_path = get_project_dir("FashionMNIST")

print_(home)

print_(models_path)

/home/naneja/datasets/n/FashionMNIST

/home/naneja/datasets/n/FashionMNIST/models

Labels = {0: "T-shirt/top",

1: "Trouser",

2: "Pullover",

3: "Dress",

4: "Coat",

5: "Sandal",

6: "Shirt",

7: "Sneaker",

8: "Bag",

9: "Ankle boot"

}

%matplotlib inline

import os

import pathlib

import matplotlib.pyplot as plt

import numpy as np

from tqdm import tqdm

import time

import torch

from torch import nn

from torchvision import datasets

from torchvision.transforms import ToTensor

from torch.utils.data import DataLoader

from IPython.display import display, HTML

device = "cuda:0" if torch.cuda.is_available() else "cpu"

print_(f"device={device}")

device = cuda:0

project = "FashionMNIST"

home = pathlib.Path.home()

home = os.path.join(home, "datasets", "n", project)

print_(f"home = {home}")

os.makedirs(home, exist_ok=True)

home = /home/naneja/datasets/n/FashionMNIST

training_data = datasets.FashionMNIST(root=home,

train=True,

download=True,

transform=ToTensor())

test_data = datasets.FashionMNIST(root=home,

train=False,

download=True,

transform=ToTensor())

msg = (f"len(training_data) = {len(training_data)} {tab}"

f"len(test_data) = {len(test_data)}"

)

print_(msg)

len(training_data) = 60000 len(test_data) = 10000

batch_size = 64

train_dataloader = DataLoader(training_data,

batch_size=batch_size,

shuffle=True)

test_dataloader = DataLoader(test_data,

batch_size=batch_size,

shuffle=True)

msg = (f"batch_size={batch_size}{tab}"

f"len(train_dataloader)={len(train_dataloader)}{tab}"

f"len(test_dataloader)={len(test_dataloader)}"

)

print_(msg)

batch_size = 64 len(train_dataloader) = 938 len(test_dataloader) = 157
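The loader lengths follow from the dataset sizes. With the default `drop_last=False`, a `DataLoader` yields ceil(N / batch_size) batches, and the last batch may be smaller than the rest. A quick check of that arithmetic, using only the numbers printed above:

```python
import math

batch_size = 64
n_train, n_test = 60000, 10000  # dataset sizes reported above

train_batches = math.ceil(n_train / batch_size)
test_batches = math.ceil(n_test / batch_size)

# size of the final, partially filled batch in each loader
last_train_batch = n_train - (train_batches - 1) * batch_size
last_test_batch = n_test - (test_batches - 1) * batch_size

print(train_batches, test_batches)        # 938 157
print(last_train_batch, last_test_batch)  # 32 16
```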

# Images from dataloader

msg = (f"{len(train_dataloader.dataset)}{tab}"

f"{len(test_dataloader.dataset)}")

print_(msg)

60000 10000

for X, y in test_dataloader:
    print_(f"X.shape={X.shape}{tab}y.shape={y.shape}")
    break

X.shape = torch.Size([64, 1, 28, 28]) y.shape = torch.Size([64])

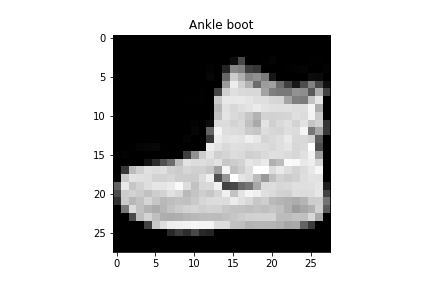

X, y = training_data[0][0], training_data[0][1]

img = X[0]  # drop the channel dim: [1, 28, 28] -> [28, 28], same as torch.squeeze(X)

label = y

label = Labels[label]

plt.imshow(img, cmap="gray");

plt.title(label);

img_name = get_img_name(lesson)

plt.savefig(img_name)

insert_image(img_name, topic)

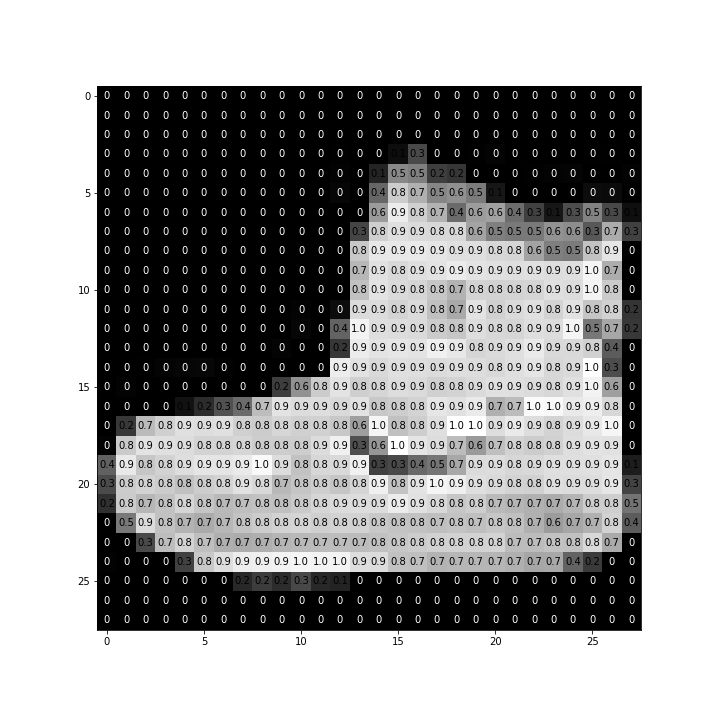

#img = np.squeeze(images[1])
fig = plt.figure(figsize=(10, 10))
ax = fig.add_subplot(111)
ax.imshow(img, cmap='gray')
height, width = img.shape  # tensor shape is (rows, columns)
# write each pixel value on top of its cell
for c in range(width):
    for r in range(height):
        val = round(img[r][c].item(), 1)
        font_color = "black"
        if val == 0.0:
            val = 0
            font_color = "white"  # zero pixels are drawn black, so use white text
        ax.annotate(str(val),
                    xy=(c, r),
                    horizontalalignment='center',
                    verticalalignment='center',
                    color=font_color
                    )

img_name = get_img_name(lesson)

plt.savefig(img_name)

insert_image(img_name, topic)

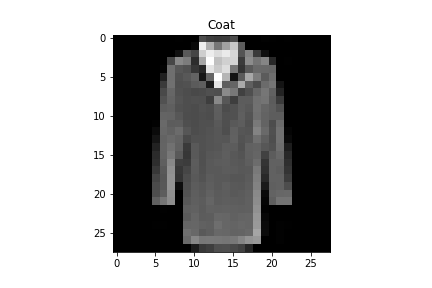

X, y = next(iter(train_dataloader)) # one batch

X, y = X[0], y[0] # One element from batch

print_(f"X.shape={X.shape}{tab}y={y}")

img = X[0]  # drop the channel dim: [1, 28, 28] -> [28, 28], same as torch.squeeze(X)

label = y.item()

label = Labels[label]

plt.imshow(img, cmap="gray");

plt.title(label);

img_name = get_img_name(lesson)

plt.savefig(img_name)

insert_image(img_name, topic)

X.shape = torch.Size([1, 28, 28]) y = 4

class NeuralNetwork(nn.Module):
    def __init__(self):
        super(NeuralNetwork, self).__init__()
        self.flatten = nn.Flatten()
        self.layer1 = nn.Linear(28*28, 512)
        self.relu = nn.ReLU()
        self.layer2 = nn.Linear(512, 512)
        self.layer3 = nn.Linear(512, 10)

    def forward(self, x):
        x = self.flatten(x)
        x = self.layer1(x)
        x = self.relu(x)
        x = self.layer2(x)
        x = self.relu(x)
        x = self.layer3(x) # logits
        return x  # without this, forward() returns None

model = NeuralNetwork()

model = model.to(device)

print(model)

NeuralNetwork(

(flatten): Flatten()

(layer1): Linear(in_features=784, out_features=512, bias=True)

(relu): ReLU()

(layer2): Linear(in_features=512, out_features=512, bias=True)

(layer3): Linear(in_features=512, out_features=10, bias=True)

)
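The printed layer sizes are enough to count the trainable parameters by hand. Each `Linear` layer holds a weight matrix of shape (out_features, in_features) plus one bias per output; `Flatten` and `ReLU` hold none. A small sketch of that arithmetic:

```python
# (in_features, out_features) for layer1, layer2, layer3 as printed above
layers = [(784, 512), (512, 512), (512, 10)]

# weights (in * out) plus one bias per output unit
per_layer = [n_in * n_out + n_out for n_in, n_out in layers]
total = sum(per_layer)

print(per_layer)  # [401920, 262656, 5130]
print(total)      # 669706
```

The same total should come out of `sum(p.numel() for p in model.parameters())` on the model itself.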

# Define model

class NeuralNetwork(nn.Module):
    def __init__(self):
        super(NeuralNetwork, self).__init__()
        self.flatten = nn.Flatten()
        self.linear_relu_stack = nn.Sequential(
            nn.Linear(28*28, 512),
            nn.ReLU(),
            nn.Linear(512, 512),
            nn.ReLU(),
            nn.Linear(512, 10)
        )

    def forward(self, x):
        x = self.flatten(x)
        logits = self.linear_relu_stack(x)
        return logits

model = NeuralNetwork()

model = model.to(device)

print(model)

NeuralNetwork(

(flatten): Flatten()

(linear_relu_stack): Sequential(

(0): Linear(in_features=784, out_features=512, bias=True)

(1): ReLU()

(2): Linear(in_features=512, out_features=512, bias=True)

(3): ReLU()

(4): Linear(in_features=512, out_features=10, bias=True)

)

)

#!pip install modelsummary

#from modelsummary import summary

#summary(model, torch.zeros(1,28,28))

loss_fn = nn.CrossEntropyLoss()

optimizer = torch.optim.SGD(model.parameters(), lr=1e-3)

# Train one batch only

X, y_true = next(iter(train_dataloader))

X = X.to(device)

print_(f"X.shape={X.shape} {tab} y_true.shape={y_true.shape}")

y_pred = model(X)

print_(f"y_pred.shape={y_pred.shape}")

print_(f"y_pred[0]={y_pred[0]}")

X.shape = torch.Size([64, 1, 28, 28]) y_true.shape = torch.Size([64])

y_pred.shape = torch.Size([64, 10])

y_pred[0] = tensor([ 0.0323, 0.0208, 0.0066, 0.0843, 0.0040, 0.1255, 0.0641, -0.0660, -0.0725, -0.0335], device = 'cuda:0', grad_fn = <SelectBackward>)
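The ten values in `y_pred[0]` are raw logits, one per class, not probabilities. Applying softmax turns them into probabilities, and the predicted class is the index of the largest value. The sketch below does this in plain Python on the numbers copied from the printout above. Because the model is still untrained, all probabilities sit close to 0.1 and the "prediction" is essentially arbitrary.

```python
import math

# logits copied from the y_pred[0] printout above (untrained model)
logits = [0.0323, 0.0208, 0.0066, 0.0843, 0.0040,
          0.1255, 0.0641, -0.0660, -0.0725, -0.0335]

# numerically stable softmax: subtract the max before exponentiating
m = max(logits)
exps = [math.exp(v - m) for v in logits]
total = sum(exps)
probs = [e / total for e in exps]

# index of the most probable class
predicted = max(range(len(probs)), key=probs.__getitem__)

print(predicted)             # 5
print(round(sum(probs), 6))  # 1.0
```

Index 5 corresponds to "Sandal" in the `Labels` dictionary.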

a = np.arange(6).reshape(2,3) + 10

print(a)

# [[10 11 12]

# [13 14 15]]

# By default, the index is into the flattened array

print_(f"np.argmax(a)={np.argmax(a)}") # 5

# array([1, 1, 1])

print_(f"np.argmax(a, axis=0)={np.argmax(a, axis=0)}")

# array([2, 2])

print_(f"np.argmax(a, axis=1)={np.argmax(a, axis=1)}")

[[10 11 12]

[13 14 15]]

np.argmax(a) = 5

np.argmax(a, axis = 0) = [1 1 1]

np.argmax(a, axis = 1) = [2 2]

pred = [0.4, 0.1, 0.01, -0.2]

pred = [pred]

pred = torch.tensor(pred, dtype=torch.float)

print_(f"pred={pred}")

target = 0

target = [target]

target = torch.tensor(target, dtype=torch.long)

print_(f"target={target}")

loss = nn.CrossEntropyLoss()(pred, target)

print_(f"loss={loss}")

correct = pred.argmax(1) == target

correct = correct.item()

print_(f"correct={correct}")

pred = tensor([[ 0.4000, 0.1000, 0.0100, -0.2000]])

target = tensor([0])

loss = 1.0874457359313965

correct = True

pred = [0.4, 0.1, 0.01, -0.2]

pred = [pred]

pred = torch.tensor(pred, dtype=torch.float)

print_(f"pred={pred}")

target = 0

target = [target]

target = torch.tensor(target, dtype=torch.long)

print_(f"target={target}")

loss = nn.CrossEntropyLoss()(pred, target)

print_(f"loss={loss}")

correct = pred.argmax(axis=1) == target

correct = correct.item()

print_(f"correct={correct}")

pred = tensor([[ 0.4000, 0.1000, 0.0100, -0.2000]])

target = tensor([0])

loss = 1.0874457359313965

correct = True

pred = [[0.4, 0.1, 0.01, -0.2], [0.4, 0.1, 0.01, -0.2]]

pred = torch.tensor(pred, dtype=torch.float)

print_(f"pred={pred}")

target = [0, 3]

target = torch.tensor(target, dtype=torch.long)

print_(f"target={target}")

loss = nn.CrossEntropyLoss()(pred, target)

print_(f"loss={loss}")

correct = pred.argmax(axis=1) == target

print_(f"correct={correct}")

correct = correct.type(torch.float)

print_(f"correct={correct}")

correct = correct.sum()

print_(f"correct={correct}")

correct = correct.item()

print_(f"correct={correct}")

pred = tensor([[ 0.4000, 0.1000, 0.0100, -0.2000], [ 0.4000, 0.1000, 0.0100, -0.2000]])

target = tensor([0, 3])

loss = 1.3874456882476807

correct = tensor([ True, False])

correct = tensor([1., 0.])

correct = 1.0

correct = 1.0
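The loss values printed in these cells can be reproduced by hand. For one sample, cross-entropy on logits is the log of the summed exponentials minus the logit of the target class, and `nn.CrossEntropyLoss` averages over the batch by default. A plain-Python sketch using the same `pred` and `target` values as above:

```python
import math

def cross_entropy(logits, target):
    """Negative log-softmax of the target class for one sample."""
    log_sum_exp = math.log(sum(math.exp(v) for v in logits))
    return log_sum_exp - logits[target]

logits = [0.4, 0.1, 0.01, -0.2]

loss_0 = cross_entropy(logits, 0)   # target is the largest logit
loss_3 = cross_entropy(logits, 3)   # target is the smallest logit
batch_loss = (loss_0 + loss_3) / 2  # default reduction is the mean

print(round(loss_0, 4))      # 1.0874
print(round(batch_loss, 4))  # 1.3874
```

The second sample is penalised more because its target class received the lowest logit.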

def train(dataloader, model, loss_fn, optimizer):
    num_batches = len(dataloader)  # batches in this loader, not in test_dataloader
    size = len(dataloader.dataset) # 60000
    model = model.to(device)
    model.train()
    batch_loss, total_correct = 0., 0.
    for batch, (X, y) in enumerate(tqdm(dataloader)):
        X, y = X.to(device), y.to(device)

        # Compute prediction error
        pred = model(X)
        loss = loss_fn(pred, y)

        # Backpropagation
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        # Accumulate the batch loss and the number of correct predictions
        batch_loss += loss.item()
        correct = (pred.argmax(axis=1) == y)
        correct = correct.type(torch.float)
        correct = correct.sum()
        correct = correct.item()
        total_correct += correct

    total_correct /= size      # fraction of samples classified correctly
    batch_loss /= num_batches  # mean loss per batch
    msg = (f"Train_Accuracy = {total_correct:0.1%}{tab}"
           f"Train_Loss = {batch_loss:.3f}")
    print_(msg)

# Train One Epoch

train(train_dataloader, model, loss_fn, optimizer)

100%|██████████| 938/938 [00:03<00:00, 259.91it/s]

Train_Accuracy = 27.4% Train_Loss = 13.416
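The Train_Loss in this log is several times larger than the Test_Loss values reported later, even though both are meant to be a mean loss per batch. One consistent explanation, which is an assumption rather than something the log states, is that the summed training loss was divided by the number of test batches (157) instead of the number of training batches (938). The sketch below rescales the logged value under that assumption:

```python
train_batches, test_batches = 938, 157  # loader lengths reported earlier
reported_train_loss = 13.416            # value from the log line above

# assumption: the summed loss was divided by 157 instead of 938
summed_loss = reported_train_loss * test_batches
per_batch_mean = summed_loss / train_batches

print(round(per_batch_mean, 3))  # 2.246
```

A value near 2.3 is what a ten-class model produces when it guesses almost uniformly, since ln(10) is about 2.303, so the rescaled number is plausible for a first epoch.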

# Evaluate model:
model.eval() # switch dropout and batchnorm layers to inference behaviour
with torch.no_grad(): # no gradient tracking
    #out_data = model(data)
    pass
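The two calls do different jobs: `model.eval()` changes how layers such as dropout behave, while `torch.no_grad()` stops autograd from recording operations. A minimal sketch on a toy model (the model `toy` is hypothetical and only there to make the effect visible):

```python
import torch
from torch import nn

# toy model with a dropout layer (hypothetical, for illustration only)
toy = nn.Sequential(nn.Linear(4, 2), nn.Dropout(p=0.5))
x = torch.ones(1, 4)

toy.eval()             # dropout becomes a no-op
with torch.no_grad():  # outputs are not attached to the autograd graph
    out_a = toy(x)
    out_b = toy(x)

print(toy.training)               # False
print(out_a.requires_grad)        # False
print(torch.equal(out_a, out_b))  # True
```

In training mode the two forward passes would generally differ, because dropout zeroes a random subset of activations on every call.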

len(test_dataloader.dataset),

(10000,)

def test(dataloader, model, loss_fn):
    num_batches = len(dataloader)  # batches in the loader passed in
    size = len(dataloader.dataset) # 10000
    model.eval()
    batch_loss, total_correct = 0, 0
    with torch.no_grad():
        for X, y in tqdm(dataloader):
            X, y = X.to(device), y.to(device)
            pred = model(X)
            loss = loss_fn(pred, y).item()
            batch_loss += loss
            correct = (pred.argmax(1) == y)
            correct = correct.type(torch.float)
            correct = correct.sum()
            correct = correct.item()
            total_correct += correct

    batch_loss /= num_batches  # mean loss per batch
    total_correct /= size      # fraction of samples classified correctly
    msg = (f"Test_Accuracy={total_correct:0.1%}{tab}"
           f"Test_Loss={batch_loss:.3f}")
    print_(msg)

# Test One Epoch

test(test_dataloader, model, loss_fn)

100%|██████████| 157/157 [00:00<00:00, 301.22it/s]

Test_Accuracy = 49.1% Test_Loss = 2.172

epochs = 5
for t in range(epochs):
    print(f"Epoch {t+1}\n-------------------------------")
    time.sleep(1)
    train(train_dataloader, model, loss_fn, optimizer)
    test(test_dataloader, model, loss_fn)
print("Done!")

Epoch 1

-------------------------------

100%|██████████| 938/938 [00:03<00:00, 259.85it/s]

Train_Accuracy = 54.6% Train_Loss = 12.312

100%|██████████| 157/157 [00:00<00:00, 297.65it/s]

Test_Accuracy = 58.2% Test_Loss = 1.924

Epoch 2

-------------------------------

100%|██████████| 938/938 [00:03<00:00, 260.97it/s]

Train_Accuracy = 58.9% Train_Loss = 10.379

100%|██████████| 157/157 [00:00<00:00, 299.70it/s]

Test_Accuracy = 60.1% Test_Loss = 1.556

Epoch 3

-------------------------------

100%|██████████| 938/938 [00:03<00:00, 260.62it/s]

Train_Accuracy = 62.1% Train_Loss = 8.360

100%|██████████| 157/157 [00:00<00:00, 299.20it/s]

Test_Accuracy = 63.0% Test_Loss = 1.275

Epoch 4

-------------------------------

100%|██████████| 938/938 [00:03<00:00, 260.91it/s]

Train_Accuracy = 64.6% Train_Loss = 7.014

100%|██████████| 157/157 [00:00<00:00, 297.97it/s]

Test_Accuracy = 64.4% Test_Loss = 1.102

Epoch 5

-------------------------------

100%|██████████| 938/938 [00:03<00:00, 261.41it/s]

Train_Accuracy = 66.0% Train_Loss = 6.171

100%|██████████| 157/157 [00:00<00:00, 299.42it/s]

Test_Accuracy = 65.2% Test_Loss = 0.990

Done!

# Saving Model

model_dir = os.path.join(home, "models")

os.makedirs(model_dir, exist_ok=True)

model_pth = os.path.join(model_dir, "model.pth")

torch.save(model.state_dict(), model_pth)

print_(f"Saved PyTorch Model State to {model_pth}")

Saved PyTorch Model State to /home/naneja/datasets/n/FashionMNIST/models/model.pth

# Load Model

model = NeuralNetwork()

model.load_state_dict(torch.load(model_pth));

model = model.to(device)

test(test_dataloader, model, loss_fn)

100%|██████████| 157/157 [00:00<00:00, 297.76it/s]

Test_Accuracy = 65.2% Test_Loss = 0.991
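Saving a `state_dict` stores only the parameter tensors, so loading needs a freshly constructed model with the same architecture. The sketch below shows the same round trip on a toy layer, writing to an in-memory buffer instead of `model.pth`. The `map_location="cpu"` argument is an addition not used in the cells above; it can help when a checkpoint saved on a GPU is loaded on a machine without one.

```python
import io
import torch
from torch import nn

source = nn.Linear(4, 2)  # toy stand-in for the trained model
buffer = io.BytesIO()     # in-memory file instead of model.pth
torch.save(source.state_dict(), buffer)

buffer.seek(0)
state = torch.load(buffer, map_location="cpu")  # tensors land on the CPU

restored = nn.Linear(4, 2)  # must have the same architecture
restored.load_state_dict(state)

# compare every parameter tensor of the two models
same = all(torch.equal(a, b)
           for a, b in zip(source.state_dict().values(),
                           restored.state_dict().values()))
print(same)  # True
```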

model.eval()

model = model.to("cpu")

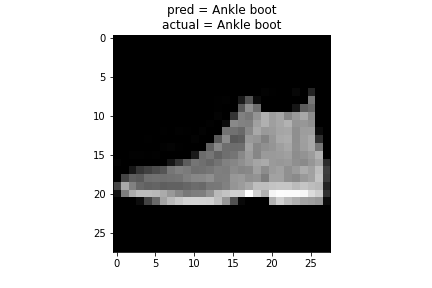

X, y = test_data[0][0], test_data[0][1]

print_(f"X.shape={X.shape}")

with torch.no_grad():
    pred = model(X)

#print(pred)

pred = pred[0].argmax(axis=0)

#print(pred)

pred = Labels[pred.item()]

actual = Labels[y]

img = X[0]

plt.imshow(img, cmap="gray");

plt.title(f"pred = {pred}\nactual = {actual}");

img_name = get_img_name(lesson)

plt.savefig(img_name)

insert_image(img_name, topic)

X.shape = torch.Size([1, 28, 28])
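A single dataset item has shape [1, 28, 28] (channel, height, width) and no batch dimension, yet the model accepts it. That works because `nn.Flatten` keeps the first dimension and flattens the rest, so the channel dimension of size 1 is read as a batch of one. A short sketch comparing this with adding the batch dimension explicitly via `unsqueeze(0)`:

```python
import torch
from torch import nn

flatten = nn.Flatten()            # flattens every dimension after the first
single = torch.zeros(1, 28, 28)   # one image as stored in the dataset: [C, H, W]

as_is = flatten(single)                 # channel dim is read as the batch dim
batched = flatten(single.unsqueeze(0))  # explicit batch: [1, 1, 28, 28]

print(as_is.shape)    # torch.Size([1, 784])
print(batched.shape)  # torch.Size([1, 784])
```

Both routes give the same input to the linear layers here, but the explicit batch dimension is the safer habit for images with more than one channel.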