Behavior-Cloning


In this project, I implemented a convolutional neural network in Keras to clone driving behavior. The model outputs a steering angle for an autonomous vehicle. The final project video is available here

Project Resources

Project Goals

- Use the simulator to collect data of good driving behavior

- Build a convolutional neural network in Keras that predicts steering angles from images

- Train and validate the model with a training and validation set

- Test that the model successfully drives around track one without leaving the road

Model Architecture

    from keras.models import Sequential
    from keras.layers import (Activation, Convolution2D, Dense, Dropout,
                              Flatten, Lambda, MaxPooling2D)

    def nv_model2():
        # `f` (the Lambda preprocessing function) and `input_shape`
        # are not shown in this snippet.
        model = Sequential()
        model.add(Lambda(function=f, input_shape=input_shape))

        # Five blocks of 3x3 convolution, ReLU, and 2x2 max pooling (stride 1)
        model.add(Convolution2D(filters=64, kernel_size=(3,3)))
        model.add(Activation('relu'))
        model.add(MaxPooling2D(pool_size=(2,2), strides=(1,1)))

        model.add(Convolution2D(filters=64, kernel_size=(3,3)))
        model.add(Activation('relu'))
        model.add(MaxPooling2D(pool_size=(2,2), strides=(1,1)))

        model.add(Convolution2D(filters=64, kernel_size=(3,3)))
        model.add(Activation('relu'))
        model.add(MaxPooling2D(pool_size=(2,2), strides=(1,1)))

        model.add(Convolution2D(filters=68, kernel_size=(3,3)))
        model.add(Activation('relu'))
        model.add(MaxPooling2D(pool_size=(2,2), strides=(1,1)))

        model.add(Convolution2D(filters=36, kernel_size=(3,3)))
        model.add(Activation('relu'))
        model.add(MaxPooling2D(pool_size=(2,2), strides=(1,1)))

        model.add(Dropout(0.2))
        model.add(Flatten())

        # Fully connected head ending in a single steering-angle output
        model.add(Dense(1164))
        model.add(Activation('relu'))
        model.add(Dropout(0.2))
        model.add(Dense(100))
        model.add(Activation('relu'))
        model.add(Dense(50))
        model.add(Activation('relu'))
        model.add(Dense(10))
        model.add(Activation('relu'))
        model.add(Dense(1))

        return model
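
To get a feel for the size of this network, the spatial dimensions can be traced by hand. The sketch below is only an illustration: it assumes a 64 x 64 input (the size the generator resizes to) and Keras' default `'valid'` padding with stride 1 for the convolutions. The helper names `conv_out` and `trace_shapes` are hypothetical and not part of the project code.

```python
def conv_out(size, kernel, stride=1):
    """Output length along one axis for an unpadded ('valid') conv or pool."""
    return (size - kernel) // stride + 1


def trace_shapes(height=64, width=64, filters=(64, 64, 64, 68, 36)):
    """Return the (height, width, channels) shape after each conv + pool pair."""
    shapes = []
    for n_filters in filters:
        # 3x3 convolution, stride 1, no padding: each side shrinks by 2
        height, width = conv_out(height, 3), conv_out(width, 3)
        # 2x2 max pooling with stride 1, no padding: each side shrinks by 1
        height, width = conv_out(height, 2), conv_out(width, 2)
        shapes.append((height, width, n_filters))
    return shapes


shapes = trace_shapes()
h, w, c = shapes[-1]
flattened = h * w * c  # number of inputs to the first Dense layer
print(shapes, flattened)
```

Because the pooling layers use a stride of 1, each block shrinks the feature map by only three pixels per side, so under these assumptions the `Flatten` layer feeds a large vector into `Dense(1164)`.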

Training Approach

I used the default dataset provided by Udacity. I separated the dataset into the following three categories:

- Images from Center Camera

- Images from Left Camera

- Images from Right Camera

The center camera images were first split into a training set and a validation set in a ratio of 0.8:0.2.
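
A split like this can be sketched with the standard library alone. This is a minimal illustration, not the project's actual code: the function name `split_samples`, the shuffle before splitting, the fixed seed, and the placeholder file names are all assumptions.

```python
import random


def split_samples(samples, train_fraction=0.8, seed=0):
    """Shuffle a copy of the samples and split it into (train, valid) lists."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is repeatable
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Placeholder rows of (image path, steering angle); the names are made up.
rows = [("center_%03d.jpg" % i, 0.0) for i in range(10)]
train_rows, valid_rows = split_samples(rows)
print(len(train_rows), len(valid_rows))
```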

I then analyzed the training images (Center Camera Images) in the following three categories:

- Driving mostly straight:

  - Images with -0.15 <= angle <= 0.15

- Driving right to left:

  - Images with angle < -0.15

- Driving left to right:

  - Images with angle > 0.15
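
The three categories above amount to a simple threshold test on the steering angle. The sketch below shows one way to count images per category; the function name `steering_category`, the short labels, and the sample angles are illustrative assumptions.

```python
from collections import Counter

THRESHOLD = 0.15  # boundary between "mostly straight" and turning


def steering_category(angle, threshold=THRESHOLD):
    """Classify a steering angle as 'left', 'straight', or 'right'."""
    if angle < -threshold:
        return "left"      # driving right to left
    if angle > threshold:
        return "right"     # driving left to right
    return "straight"      # -threshold <= angle <= threshold


# Made-up angles, including both boundary values
angles = [0.0, -0.3, 0.1, 0.4, -0.15, 0.15]
counts = Counter(steering_category(a) for a in angles)
print(counts)
```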

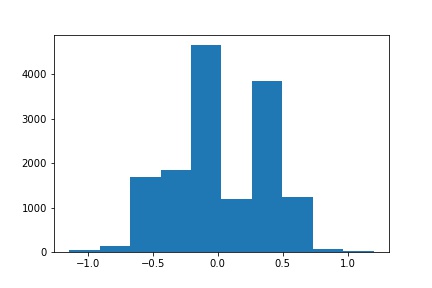

The training dataset was highly skewed: it contained a large number of straight-driving images and comparatively few images of driving left or right. Because I had considered only center camera images up to this point, I decided to add images from the left and right cameras.

To add images, I sampled a number of rows from the original dataset (more than were strictly required to balance the categories) and checked the steering angle of each. Depending on the steering angle, the image from either the left or the right camera was added to the training dataset with an angle adjustment of 0.2, as follows:

- A steering angle of less than -0.15 implies the car is turning left, so the image from the right camera was added with the angle adjustment

- A steering angle of greater than 0.15 implies the car is turning right, so the image from the left camera was added with the angle adjustment

The image below shows the histogram of steering angles in the training dataset after adding the additional images.
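
The selection rule in the two bullets above could look roughly like the following. Note the assumptions: the text does not state the sign of the 0.2 adjustment, so the sketch follows the common convention of steering further into the turn (subtracting for the right camera, adding for the left). The dictionary keys and the function name `side_camera_sample` are hypothetical.

```python
def side_camera_sample(row, threshold=0.15, adjustment=0.2):
    """Return an extra (image_path, angle) pair for a turning sample, else None.

    `row` is assumed to be a dict with 'left', 'right', and 'steering' keys.
    """
    angle = row["steering"]
    if angle < -threshold:
        # Turning left: use the right camera image.
        # ASSUMPTION: the adjustment is subtracted for the right camera.
        return row["right"], angle - adjustment
    if angle > threshold:
        # Turning right: use the left camera image.
        # ASSUMPTION: the adjustment is added for the left camera.
        return row["left"], angle + adjustment
    return None  # mostly straight: nothing is added


example = {"left": "left_001.jpg", "right": "right_001.jpg", "steering": -0.3}
extra = side_camera_sample(example)
print(extra)
```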

Generator

In the generator, I cropped every image so that the top 30% and the bottom 10% are discarded. After cropping, I resized the image to (64, 64, 3).

During training, I generated a random integer from 0 to 3 and applied one of four transformations based on its value: a random rotation in the range (-15, 15), brightening the image, flipping the image, or leaving it unchanged.
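
The crop and resize step can be sketched with NumPy alone. This is an illustration under stated assumptions: the 160 x 320 input frame size is assumed, and the nearest-neighbour index sampling stands in for whatever resize routine the project actually used (for example an image library's resize function). The name `crop_and_resize` is hypothetical.

```python
import numpy as np


def crop_and_resize(image, top_frac=0.30, bottom_frac=0.10, size=(64, 64)):
    """Drop the top and bottom bands of an image, then resize to `size`."""
    height = image.shape[0]
    top = int(height * top_frac)                  # first row to keep
    bottom = height - int(height * bottom_frac)   # one past the last row to keep
    cropped = image[top:bottom]

    # Nearest-neighbour resize: pick evenly spaced source rows and columns.
    rows = np.arange(size[0]) * cropped.shape[0] // size[0]
    cols = np.arange(size[1]) * cropped.shape[1] // size[1]
    return cropped[rows][:, cols]


frame = np.zeros((160, 320, 3), dtype=np.uint8)  # assumed camera frame size
out = crop_and_resize(frame)
print(out.shape)
```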
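
One way to picture the random choice among the four transformations is the sketch below. Several details are assumptions rather than the project's actual code: the mapping of numbers to transformations, the rotation range being in degrees, the brightness factor range, negating the steering angle when flipping, and the NumPy-only nearest-neighbour rotation. All function names are hypothetical.

```python
import random

import numpy as np


def rotate(image, degrees):
    """Nearest-neighbour rotation about the image centre; edges are replicated."""
    h, w = image.shape[:2]
    theta = np.deg2rad(degrees)
    ys, xs = np.mgrid[0:h, 0:w]
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    # Inverse mapping: for every output pixel, find the source pixel.
    src_x = np.cos(theta) * (xs - cx) + np.sin(theta) * (ys - cy) + cx
    src_y = -np.sin(theta) * (xs - cx) + np.cos(theta) * (ys - cy) + cy
    src_x = np.clip(np.rint(src_x).astype(int), 0, w - 1)
    src_y = np.clip(np.rint(src_y).astype(int), 0, h - 1)
    return image[src_y, src_x]


def brighten(image, factor):
    """Scale pixel values by `factor`, clipped to the 0..255 range."""
    return np.clip(image.astype(np.float32) * factor, 0, 255).astype(np.uint8)


def random_transform(image, angle, rng=random):
    """Apply one of four transformations, chosen by a random integer 0..3."""
    choice = rng.randint(0, 3)  # both endpoints are included
    if choice == 0:
        return rotate(image, rng.uniform(-15, 15)), angle
    if choice == 1:
        return brighten(image, rng.uniform(1.0, 1.5)), angle  # assumed range
    if choice == 2:
        # Horizontal flip; ASSUMPTION: the steering angle changes sign.
        return image[:, ::-1], -angle
    return image, angle  # unchanged


image = np.full((64, 64, 3), 100, dtype=np.uint8)
new_image, new_angle = random_transform(image, 0.3, random.Random(0))
print(new_image.shape, new_angle)
```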

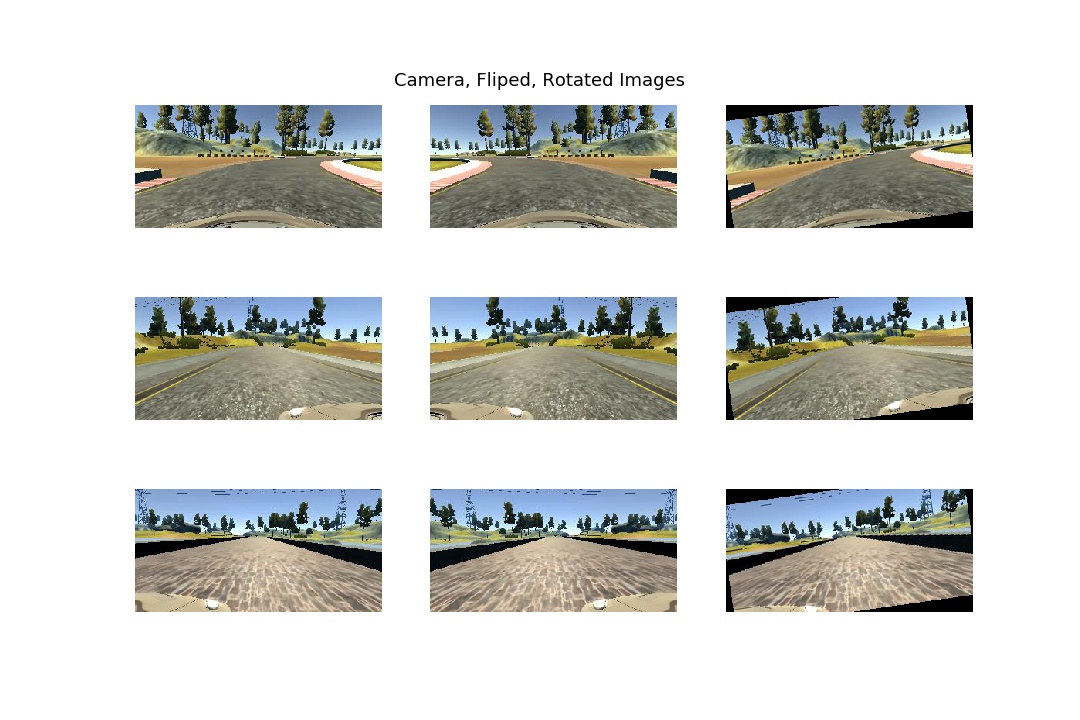

The image below shows flipping and rotation applied to the camera images.

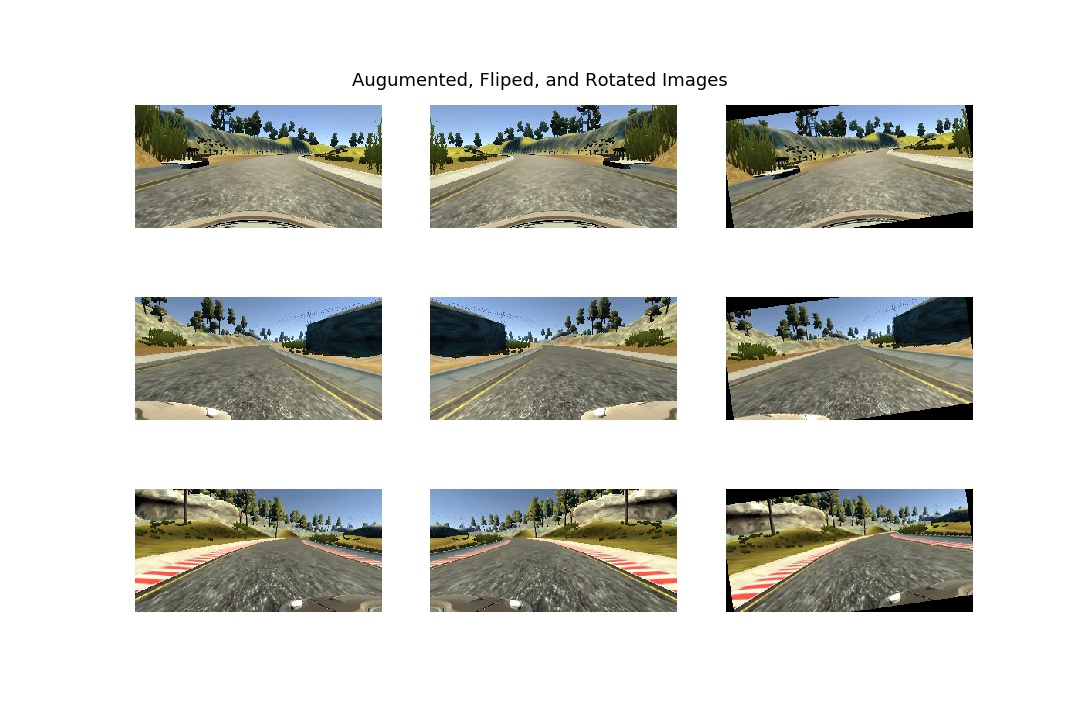

The image below shows the flipping and rotation transformations applied to a few images from straight, left, and right driving.

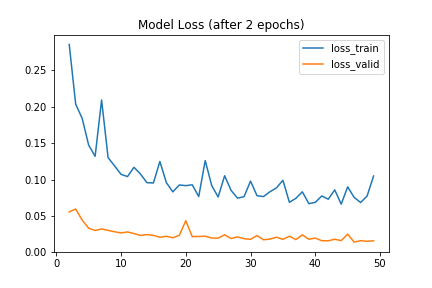

The ideal number of epochs was 50, as evidenced by the figure below showing the model loss. I used an Adam optimizer so that manually tuning the learning rate wasn’t necessary.

The model loss is shown for 50 epochs, excluding the first two epochs, whose loss was much higher than that of the later epochs.

Video Output

The final video output is available here